EducateAI was a collaborative research project focused on designing meaningful AI tools for the blind and visually impaired community. As part of a six-person team, I spent two weeks at the Carroll Center for the Blind conducting ethnographic research, observing classes, and interviewing students and instructors. Through more than thirty interviews, I learned how difficult it can be for blind and visually impaired users to organize and revisit notes. Traditional note-taking tools often produce long, unsearchable audio files or complex folder systems that are frustrating to navigate. That insight led our team to design EchoMinds, an AI-powered note-taking app that uses retrieval rather than generative AI to help users efficiently find and manage their own notes.


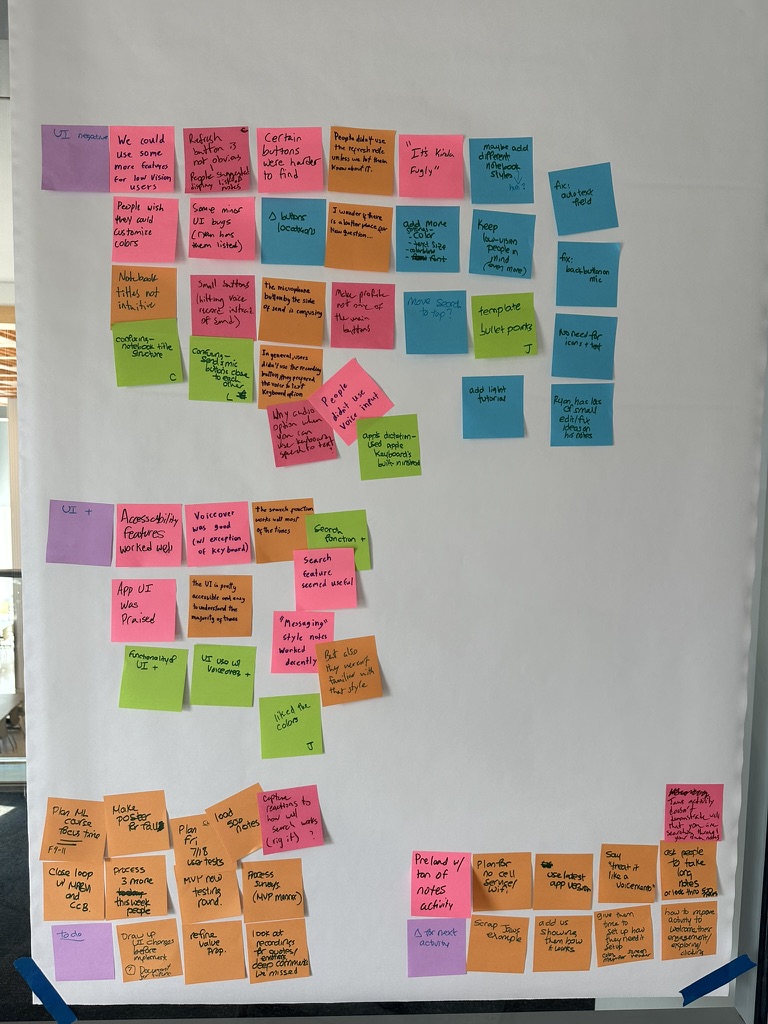

After helping form the concept, I led front-end development in SwiftUI and directed all stages of UI and UX testing. I focused on building an interface that was accessible, screen-reader compatible, and consistent across views. Working closely with the other developers and researchers, I translated user feedback into design updates: refining layouts, adjusting button sizes, and improving contrast for low-vision users. Across three testing stages with more than thirty participants, the rate of reported accessibility issues fell from 50 percent in the first cycle to zero in the second.

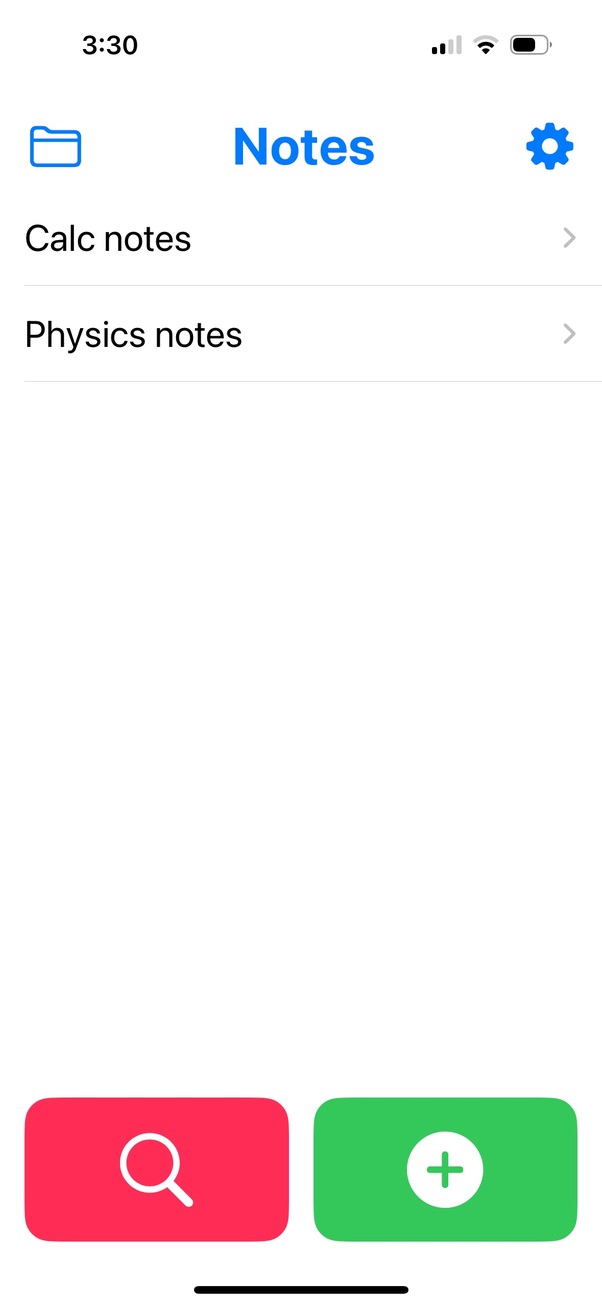

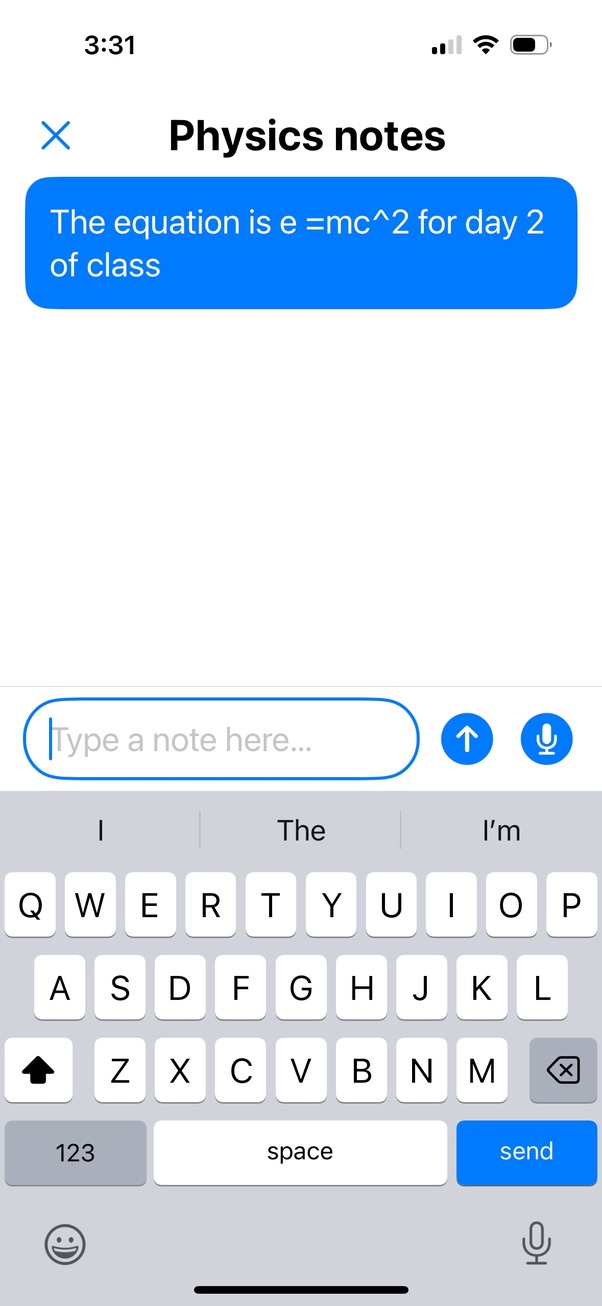

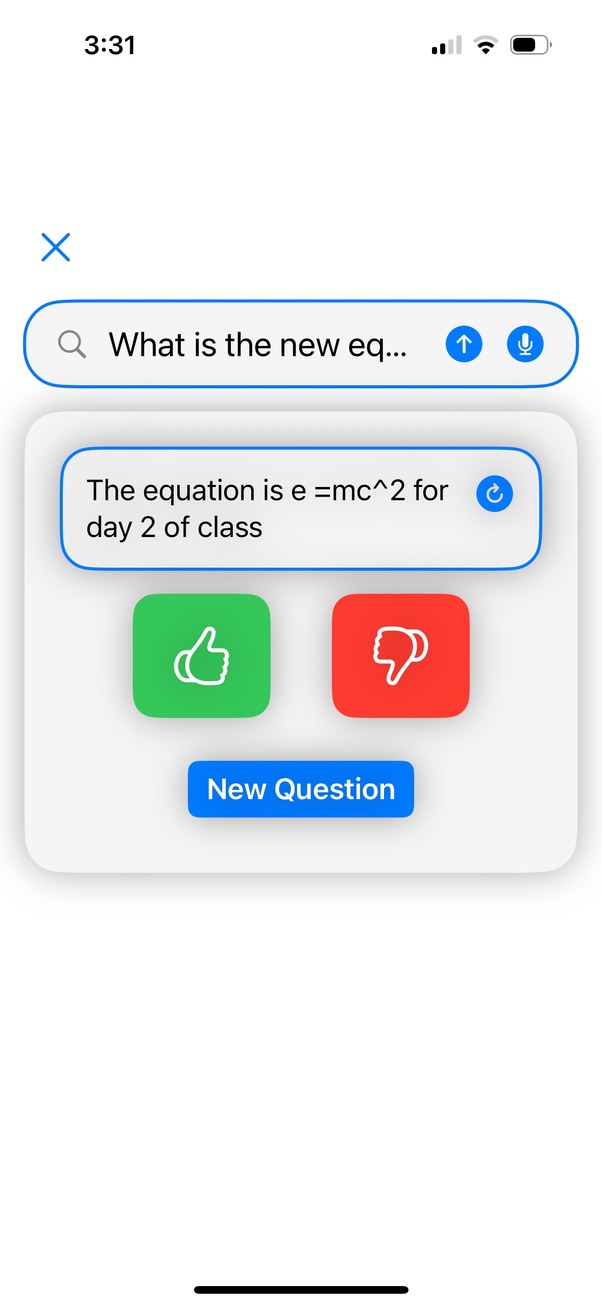

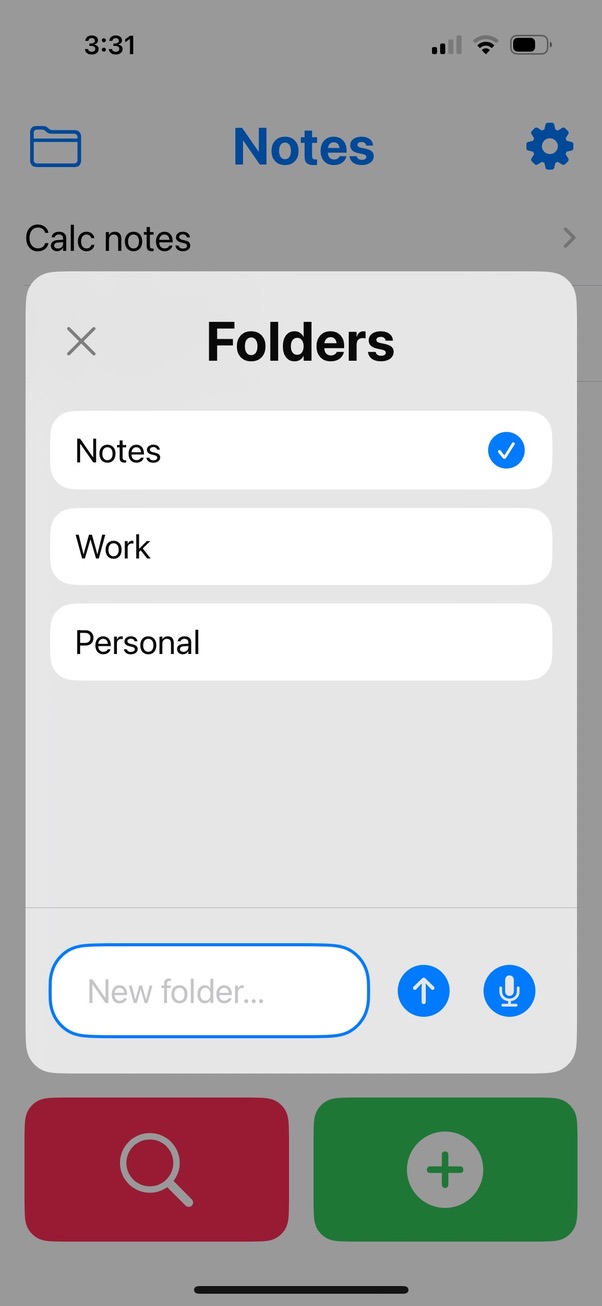

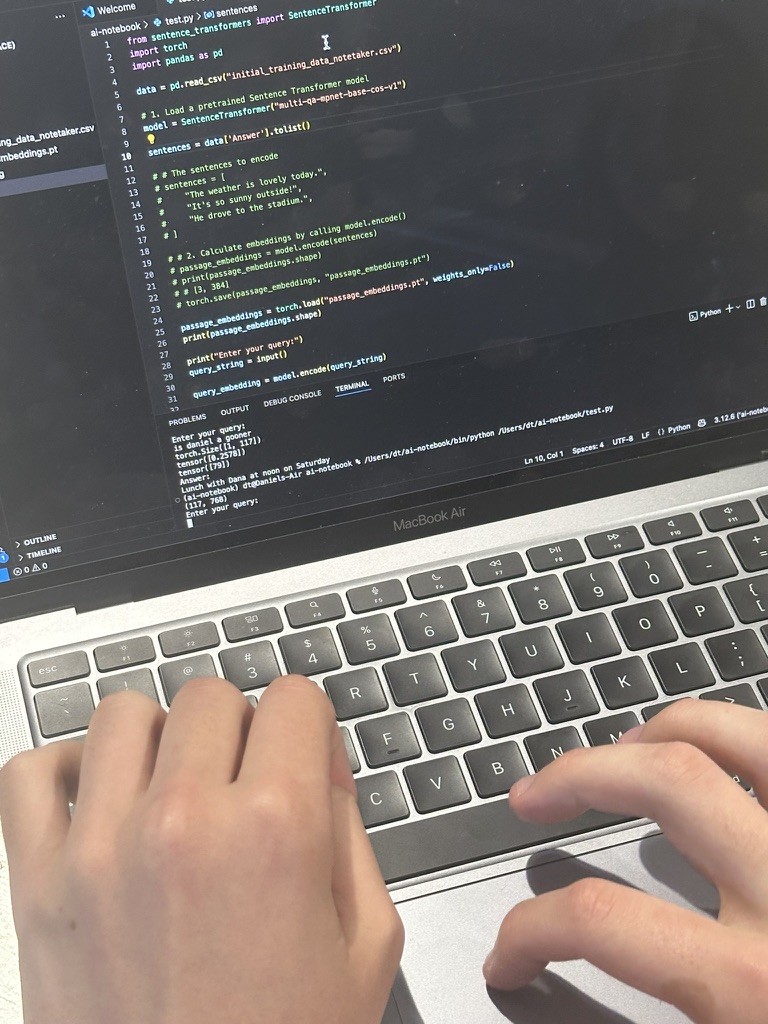

EchoMinds used a retrieval-based AI model that let users search their notes and get back the exact text they had written, rather than newly generated text. This approach prioritized privacy, trust, and reliability for users who wanted tools that supported their own input rather than replacing it. The app offered speech input, customizable color modes, and full VoiceOver integration, all organized around a simple hierarchy of folders, notebooks, and notes. Together, these choices made the app intuitive for both screen-reader and low-vision users while keeping the interface clean and approachable.
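
To make the retrieval-versus-generation distinction concrete, here is a minimal Swift sketch. It assumes a simple keyword-overlap ranking as a stand-in, since the actual EchoMinds retrieval model isn't described here, and the `Folder`, `Notebook`, `Note`, `SearchHit`, `tokenize`, and `search` names are hypothetical. The property it illustrates is that every result is the user's own stored note text, returned verbatim along with its location in the folder, notebook, and note hierarchy.

```swift
// Hypothetical model types mirroring the folder > notebook > note hierarchy.
// (Illustrative only; not the EchoMinds data model.)
struct Note {
    let title: String
    let body: String
}

struct Notebook {
    let name: String
    let notes: [Note]
}

struct Folder {
    let name: String
    let notebooks: [Notebook]
}

/// A search hit: the note's exact stored text plus where it lives.
struct SearchHit {
    let path: String   // "Folder / Notebook / Note title"
    let text: String   // verbatim note body, never rewritten
    let score: Int     // number of distinct query terms found in the note
}

/// Lowercases text and splits it on anything that is not a letter or digit.
func tokenize(_ text: String) -> Set<String> {
    let words = text.lowercased().split(whereSeparator: { !$0.isLetter && !$0.isNumber })
    return Set(words.map { String($0) })
}

/// Hypothetical keyword-overlap retrieval: ranks notes by how many distinct
/// query terms they contain and returns the stored text of the best matches.
/// Nothing is generated; results are always the user's own words.
func search(_ query: String, in folders: [Folder], limit: Int = 5) -> [SearchHit] {
    let terms = tokenize(query)
    guard !terms.isEmpty else { return [] }

    var hits: [SearchHit] = []
    for folder in folders {
        for notebook in folder.notebooks {
            for note in notebook.notes {
                let noteTerms = tokenize(note.title + " " + note.body)
                let score = terms.intersection(noteTerms).count
                if score > 0 {
                    hits.append(SearchHit(
                        path: "\(folder.name) / \(notebook.name) / \(note.title)",
                        text: note.body,
                        score: score))
                }
            }
        }
    }
    // Highest score first; ties are broken alphabetically by path.
    hits.sort { $0.score != $1.score ? $0.score > $1.score : $0.path < $1.path }
    return Array(hits.prefix(limit))
}

// Usage: a tiny sample library and a spoken-style query.
let library = [
    Folder(name: "Biology", notebooks: [
        Notebook(name: "Week 1", notes: [
            Note(title: "Cells", body: "Mitochondria produce energy for the cell."),
            Note(title: "Plants", body: "Chloroplasts capture light energy.")
        ])
    ])
]
for hit in search("cell energy", in: library) {
    print(hit.path, "-", hit.text)
}
```

Here the "Cells" note ranks first because it contains both query terms, and each result is read back exactly as written. This sketch does no stemming, so "cell" and "cells" are treated as different words; a fuller version might add stemming or semantic matching while still returning stored text rather than generated text.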

By the end of the summer, EchoMinds had evolved into a fully functional prototype, now available on TestFlight. The project was part of the NSF IUSE EducateAI grant and contributed to the paper “Co-Creating Tools for Workers Who Are Blind Using Sociotechnical and Ethnographic Approaches,” presented at the 27th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS) in Denver, Colorado. Working on EchoMinds taught me how inclusive research, iterative design, and technical development can come together to create technology that genuinely improves accessibility and user confidence.

TestFlight link: https://testflight.apple.com/join/hMXSxFrp